MotionComposer workshop at Dämeritzsee, Berlin

The creative part of the MotionComposer team gathered for four days in December 2018 at the Dämeritzsee Hotel, which lies next to a beautiful lake, for a kind of "hackathon" workshop aimed at developing version 3.0 further through long days of work.

The Dämeritzsee, just outside of Berlin

The new tracking software, which uses stereoscopic camera input, was now much more developed and could deliver tracking data of quite good quality for many of the core parameters. For my part, it was the first time I could try the new version of Particles with the new tracking system, and we were happy to find that the central features of the sound environment worked well. Some parameters were too "trigger-happy", however, so this needs further improvement in the tracker.
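A "trigger-happy" parameter presumably fires on small fluctuations in the tracking data. The tracker code is not shown here, but a common way to calm such a detector down is to combine hysteresis (separate on and off thresholds) with a refractory period. The Python sketch below illustrates that idea with hypothetical threshold values; it is not the MotionComposer tracker.

```python
class Trigger:
    """Hypothetical event detector with hysteresis and a refractory period."""

    def __init__(self, on_level: float = 0.6, off_level: float = 0.4,
                 refractory_frames: int = 10):
        self.on_level = on_level            # value must rise above this to fire
        self.off_level = off_level          # ...and fall below this before it can fire again
        self.refractory_frames = refractory_frames
        self.armed = True
        self.frames_since_fire = refractory_frames

    def update(self, value: float) -> bool:
        """Feed one frame of a normalized parameter; return True if an event fires."""
        self.frames_since_fire += 1
        if not self.armed and value < self.off_level:
            self.armed = True  # re-arm only once the signal has clearly dropped
        if (self.armed and value > self.on_level
                and self.frames_since_fire >= self.refractory_frames):
            self.armed = False
            self.frames_since_fire = 0
            return True
        return False
```

With a signal jittering around the on-threshold, the detector fires once instead of on every small peak.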

As for the features of the Particles sound environment, we decided to add sideways arm hits, kicks and jumps. I briefly made some sketches with glissando sound particles that had some interesting qualities, and these will be developed further for the final version, in particular by giving them a sharper attack. Here are some hits followed by a few kicks:

Sketches for hits and kicks for Particles for MC3.0

I also spent some time preparing a workflow for making rhythms for Particles, including a sequencer interface in Max that could write a file ready to be loaded directly into Csound. Here is a screenshot of the 8-track, 64-step sequencer:
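The Max patch itself is not shown here, but the idea of turning a step grid into something Csound can load directly can be sketched in Python. This minimal sketch assumes that the exported file is a Csound score of `i` statements, that track n plays Csound instrument n + 1, that each step is a sixteenth note, and that p4 carries the step's velocity. All of these are hypothetical choices, and the actual file format used for Particles may well differ.

```python
from typing import Sequence

TRACKS = 8
STEPS = 64
STEPS_PER_BEAT = 4  # assumption: one step = one sixteenth note


def grid_to_score(grid: Sequence[Sequence[float]], bpm: float = 120.0) -> str:
    """Turn an 8 x 64 grid of step velocities (0 = off) into Csound score text."""
    if len(grid) != TRACKS or any(len(track) != STEPS for track in grid):
        raise ValueError(f"expected {TRACKS} tracks of {STEPS} steps")
    # The t statement sets the score tempo, so start times and durations are in beats.
    lines = [f"t 0 {bpm:g}"]
    step_len = 1 / STEPS_PER_BEAT
    for track_index, track in enumerate(grid):
        for step, velocity in enumerate(track):
            if velocity > 0:
                start = step * step_len
                # i <instrument> <start> <duration> <amplitude>
                lines.append(f"i {track_index + 1} {start:g} {step_len:g} {velocity:g}")
    lines.append("e")  # end of score
    return "\n".join(lines)
```

For example, a grid with a full-velocity hit on the first step of track 1 and a half-velocity hit on step 6 of track 3 gives the score lines `t 0 120`, `i 1 0 0.25 1`, `i 3 1.25 0.25 0.5` and `e`.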

8-track, 64-step sequencer for Particles, built in Max

We concluded the workshop by setting milestones for the further development of Particles in spring 2019.

Stocos workshop and artist talk

As part of the ARTEC seminar series, I had invited Stocos, a Spanish/Swiss artist trio creating interactive dance pieces, to give a two-day workshop and an artist talk with demonstration. The trio consists of Pablo Palacio, composer and interactive music instrument designer, Muriel Romero, choreographer and dancer, and Daniel Bisig, visual artist, sensor technician and programmer. The group combines research into embodied interaction, artificial intelligence and the simulation of swarm behavior with artistic exploration of music, visuals and dance.

Stocos (Pablo front left, Daniel front center, Muriel far right) with workshop participants

The workshop took place at DansIT's (Dance in Trøndelag) studio at Svartlamon in Trondheim, as a collaboration between ARTEC and DansIT: the former covered the group's travel and accommodation expenses, and the latter offered its venue free of charge as a residency. A total of fourteen participants (including me as the organizer) took part in the workshop, although some were not present at all times. The participants had very varied backgrounds: composers, musicians, dancers, actors, media/video artists, stage designers, dramaturgs, philosophers and music technologists. Eight of them were either NTNU employees or NTNU students, and another three had recently completed their degrees (Master/PhD) at NTNU and were now working in the art sector in Trondheim. Because the number of places was limited, additional applicants were placed on a waiting list.

The first workshop day started with a presentation of Stocos, their artistic aims and statement, followed by an overview of their work over the last 10 years or so. A core concept of the group, also reflected in their name, is the use of different types of stochastic algorithms, both at the signal level (in the form of noise) and in the mapping of movement parameters to visuals and sound. Applying swarm properties, especially in mapping and visual projections, was also central. This was first done in the piece that shares the group's name, where the swarms appeared mainly as minimalistic visual projections, mostly in black and white, showing either moving entities or the trajectories of invisible moving entities, interacting to a greater or lesser degree with the dancers. Another work, Piano-Dancer, explored the same core principles of stochastics and swarms, but here a single dancer's movements, together with algorithms, controlled a motorised piano. In the piece Neural Narratives, they created virtual extensions of the dancing body with computer visuals: tree-like entities that followed the movements of the dancers while also having a semi-autonomous character. Lastly, they presented their most recent work, The marriage between Heaven and Hell, based on the literary work of William Blake. Here they continued their work with interactive control of voices, this time using phrases from Blake's poem. Particular to this work was the use of Laban-derived expressive qualities of the body, such as energy, fluidity, symmetry or impulsivity, which were translated in real time into audible and visual digital abstractions. The piece also featured body-worn interactive lasers, where the dancers' movements controlled whether they were active or not.
The presentation spurred a lot of questions and discussion about everything from technicalities to aesthetic issues.

The second part of the first workshop day was more technical and focused on the different types of sensors the group uses. They started by showing technology they had used in earlier years, namely the Kinect. Using the open source driver implemented in EyesWeb, the sensor gave them access to both the contour and the skeleton of the dancer. Although the Kinect had relatively high latency and the skeleton tracking was quite buggy, they had still used it in recent years. Increasingly, however, they had moved to IMUs for their tracking. They used a Bosch/Adafruit BNO055 connected to an Arduino with a Wi-Fi shield, all housed in a 3D-printed case mounted on an elastic band attached to the arm or the lower leg. This avoided several of the problems associated with video-based tracking (occlusion, IR light from other sources, etc.), although IMUs come with their own specific challenges. The IMUs also provided absolute orientation data in 3D, making it possible to track the position and rotation of the extremities, not only their movements. Finally, the group showed us their most recent development: sensor shoes with seven pressure sensors in the sole.
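The BNO055's fusion output includes absolute orientation as a unit quaternion. One common step before mapping it to sound is to convert it into roll, pitch and yaw angles. The sketch below uses the standard Z-Y-X (yaw-pitch-roll) convention. It is an illustration only, not Stocos' code: how the quaternion arrives over Wi-Fi is left out, and the meaning of each axis depends on how the sensor is mounted on the limb.

```python
import math
from typing import Tuple


def quaternion_to_euler(w: float, x: float, y: float, z: float) -> Tuple[float, float, float]:
    """Convert a unit quaternion to (roll, pitch, yaw) in degrees, Z-Y-X convention."""
    # Roll: rotation about the x axis.
    roll = math.atan2(2 * (w * x + y * z), 1 - 2 * (x * x + y * y))
    # Pitch: rotation about the y axis; clamp so rounding errors cannot leave asin's domain.
    sin_pitch = max(-1.0, min(1.0, 2 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    # Yaw: rotation about the z axis.
    yaw = math.atan2(2 * (w * z + x * y), 1 - 2 * (y * y + z * z))
    return math.degrees(roll), math.degrees(pitch), math.degrees(yaw)
```

The identity quaternion (1, 0, 0, 0) gives (0, 0, 0). A quaternion with w = z = √0.5 describes a 90-degree turn about the vertical (z) axis.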

Daniel Bisig explaining the construction of the sensor shoe.

Having first monitored the sensor values in visual graphs, we spent the last part of the first workshop day on mapping the sensor values to sound. Here, the participants got to try out the IMU bands and the sensor shoes with several different mappings: synthetic singing voices, sampled speaking voices, stochastically modulated noise and several others.

The second day of the workshop started with a movement session led by Romero, focusing on exercises related to Laban's theories of the individual choreographic space around the dancer and how it can be divided into planes cutting in all directions around the dancer. Many of the exercises directly articulated these planes.

After the movement session, the focus moved to the computer-generated visuals. Most of these were in one way or another derived from swarm simulations, and Daniel Bisig spent quite some time explaining how they were programmed and manipulated to create different visual expressions. Among other things, we saw how using the individual agents' traces as visual elements, along with manipulating the pan and zoom of the space they inhabited, created a multitude of different patterns and effects. Some of the participants also got to try out an interactive algorithm that used the contour of the body to attract the swarm so that the agents delineated the user's silhouette. We also saw how the swarms could articulate letters using more or less the same technique, and how damping the attraction forces made the contours of the letters less distinct.
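The effect of damping the attraction forces can be illustrated with a deliberately simplified Python sketch. Each agent is pulled toward the nearest point of a target contour and jittered by random noise. A circle stands in for the tracked silhouette or letter outline, and every parameter value is a hypothetical assumption. Real swarm simulations such as Bisig's also include interactions between the agents, which are left out here.

```python
import math
import random


def circle_contour(n: int = 32, radius: float = 1.0):
    """Hypothetical stand-in for a tracked body contour: n points on a circle."""
    return [(radius * math.cos(2 * math.pi * i / n), radius * math.sin(2 * math.pi * i / n))
            for i in range(n)]


def nearest(points, x, y):
    """Return the contour point closest to (x, y)."""
    return min(points, key=lambda p: (p[0] - x) ** 2 + (p[1] - y) ** 2)


def simulate(contour, attraction, n_agents=50, steps=150, jitter=0.05, seed=1):
    """Pull agents toward the contour; return their final mean distance to it."""
    rng = random.Random(seed)
    agents = [[rng.uniform(-2, 2), rng.uniform(-2, 2)] for _ in range(n_agents)]
    for _ in range(steps):
        for agent in agents:
            tx, ty = nearest(contour, agent[0], agent[1])
            # Attraction scales the pull toward the contour; jitter keeps the agents moving.
            agent[0] += attraction * (tx - agent[0]) + rng.uniform(-jitter, jitter)
            agent[1] += attraction * (ty - agent[1]) + rng.uniform(-jitter, jitter)
    return sum(math.dist(a, nearest(contour, *a)) for a in agents) / n_agents
```

Running `simulate` with a weaker attraction (say 0.05 instead of 0.5) leaves the agents more loosely scattered around the contour, so the outline they draw becomes blurrier.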

Workshop participant Elena Perez trying out the interactive swarm visuals.

There was also time to try out the interactive lasers (with sound) from The marriage between Heaven and Hell, which was, naturally enough, very popular. All the lights were turned off and a hazer was activated so that the trajectories of the lasers were visible. The lasers all had lenses spreading the beam vertically by about 30%, so that the beam appeared as a line when projected on the floor. We also saw how moving the lasers in different ways generated different effects, such as "scanning" and "strobe". We then did further try-outs in the darkened space, now with the sensors controlling stage lights (via MIDI/DMX) together with transformed vocal sounds. Although the lights had significant latency, it was nice to see how they could be mapped from the IMU sensors.
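One simple way to map IMU data to lights controlled via MIDI/DMX is to scale an orientation angle to a 7-bit MIDI controller value (0 to 127), which a MIDI-to-DMX interface can then translate into a light level. The sketch below assumes an angle range of -180 to 180 degrees. It is a hypothetical illustration, not the mapping Stocos actually used.

```python
def angle_to_midi_cc(angle_deg: float, lo: float = -180.0, hi: float = 180.0) -> int:
    """Scale an orientation angle linearly to a 7-bit MIDI controller value (0-127).

    Angles outside [lo, hi] are clamped so the light never receives an invalid value.
    """
    clamped = min(max(angle_deg, lo), hi)
    return round((clamped - lo) / (hi - lo) * 127)
```

A linear scaling keeps the relation between arm movement and light level easy for a dancer to feel. Any smoothing added on top would increase the latency that was already noticeable in the lights.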

At the end of the workshop, Stocos presented longer extracts from several of their pieces to answer a question many participants had posed: How do you put it all together? This gave us a much better understanding of the artistic contexts and intentions in which the different technologies and mappings were used, and of how their artistic expressions could be transformed with different techniques.

All in all, the participants seemed highly inspired by the workshop, and several of them talked about getting new artistic ideas. Stocos were also impressed by the engagement the participants showed. Several people expressed a wish to bring Stocos back to Trondheim for a full performance.

Artist talk at Kunsthallen

On Monday, November 26, Stocos gave an artist talk at Kunsthallen as part of the ARTEC seminar series. Around 15 people attended. Stocos presented their artistic aims and statement, followed by an overview of their work over the last 10 years or so. In between discussing the different pieces, Muriel Romero demonstrated the sensor instruments used in the different performances. The artist talk lasted 90 minutes.

Muriel Romero demonstrating the sensor shoes. Daniel Bisig is watching.

Student concert at Planetariet

As part of the course Electroacoustic Music - Analysis and Composition, which I teach at NTNU, five master's students in the music technology programme performed their newly composed pieces at the Planetarium of Vitensenteret (The Science Centre) in Trondheim on November 16, 2018. The concert marked the first milestone in a joint effort to transform the stunning 36.2-channel sound system, custom-made for planetarium shows, into a concert venue for multichannel/spatial music. NTNU Music Technology, represented by myself and PhD candidate Mathieu Lacroix, together with composer Frank Ekeberg and Lars Pedersen, pedagogue at Vitensenteret, has been working to find a setup suitable for multichannel presentation of electroacoustic music. After quite a bit of experimentation during the fall of 2018, we ended up with a solution in which we used Blue Ripple Sound's plug-ins to play music in 3rd order ambisonics through the Reaper DAW, running on an external laptop connected to the IOSONO system via Dante. At the concert, the students presented compositions they had made as part of the course, adapted to the 36.2 setup at Planetariet. The concert concluded with Ekeberg's piece Terra Incognita from 2001. The concert program can be read here. I intend to publish a blog post later this winter with details about the setup, to help others arrange multichannel concerts at Planetariet.

Panel at RITMO Workshop

RITMO, the newly established center for interdisciplinary studies in rhythm, time and motion, hosted the RITMO International Motion Capture Workshop on November 12th-14th 2018 to celebrate its opening. I was invited by the chair of the event, Alexander Jensenius (UiO), to participate in a panel about interactive music systems together with John Sullivan (McGill University), Cagri Erdem (UiO) and Charles Martin (UiO). Each of us gave a short presentation of our work before we sat down for a panel discussion that also involved the audience. In my introduction, I presented the particular challenges of creating interactive music systems for people with different abilities in my work with MotionComposer.

The workshop featured several panels like this, on topics such as Music Performance Analysis, Music-Dance Analysis, Music Perception Analysis and Different Types of Motion Sensing. One whole day was dedicated to different tools (MoCap Toolbox, MIRtoolbox, EyesWeb and more). Highlights of the program were the three keynotes by Marc Leman (Ghent University), Marcelo Wanderley (McGill University) and Peter Vuust (Aarhus University). We also got a tour of the RITMO center, including the motion capture lab and the eye tracking lab.

Panel about motion capture tools at the RITMO International Motion Capture Workshop

Sound design workshop at Fusion Systems

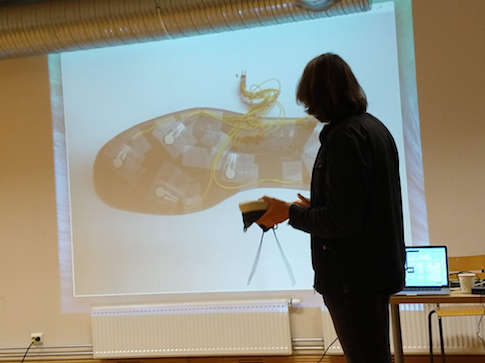

From October 8 to 11, 2018, the German company Fusion Systems, which works mainly with computer vision and sensor fusion for traffic and industry applications, hosted a workshop together with Palindrome Dance Company. The workshop aimed at developing sound design for interactive music applications for people with different abilities. I was invited together with Marcello Lusanna and Ives Schachtschabel to work on sound design and meaningful movement-sound mappings. During the workshop we got to try out a work-in-progress prototype of a stereoscopic motion tracking system.

Stereoscopic motion tracking prototype at the Fusion Systems workshop.

Panel at Knowing Music, Musical Knowing

Knowing Music, Musical Knowing was a conference and International Music Research School hosted by my university, NTNU, and the Grieg Research School on October 23rd-26th. The event website described it as follows: "This event will explore the complex and multifarious connections between music and knowing. Bringing together key thinkers and practitioners in contemporary interdisciplinary music studies – including but not limited to dance studies, music therapy, ethnomusicology, music technology, historical musicology and music education – this four-day school will inspect both the unique forms of knowledge embodied through musical practice and the different ways humans create and impart knowledge about music."

Me together with Elin Angelo, Jørgen Langdalen, Simon Gilbertson and Kari Holdhus.

For this event, I was asked to participate in a panel about the challenges and possibilities for musical knowledge transmission, learning and education across music disciplines. The panel consisted of professor Elin Angelo, Head of the Department of Music, NTNU; Jørgen Langdalen; associate professor of music therapy Simon Gilbertson; associate professor Kari Holdhus from Høgskolen Stord/Haugesund; and postdoctoral researcher Nora Kulset.

In my five-minute introduction, I briefly discussed how common denominators in the form of passion, listening and musicking bodies can help us build understanding, communicate and improve learning across disciplines. The whole introduction can be read here.

Music Tech Fest, Stockholm, September 2018

Music Tech Fest (MTF) is an annual event that gathers artists, professionals, hackers and academics for a few days of work in an intense and stimulating environment. This time the event was hosted by KTH in Stockholm, with the main event taking place September 7-9 2018. I was very happy to be invited to participate in the #MTFLabs, an extended part of the festival that ran for a full week, Monday to Sunday. In the Labs, a total of 80 artists, hackers and academics were gathered with the mission of putting on a 45-minute show at the end of the week. Everything took place inside a shut-down nuclear reactor 25 meters below ground.

The reactor hall at KTH. Photo by Mark Stokes.

The week was kicked off with presentations by Michela Magas, the founder of MTF, Danica Kragic, Airan Berg and pop singer Imogen Heap. Then, through a process of self-organization, we split into groups and subgroups according to the technologies and artistic expressions we wished to work with.

I soon found a good connection with Joseph Wilk (UK), a creative (live) coder and computer whiz, and Lilian Jap (SE), a media artist and dancer, and we decided to form a group around the theme of paralanguage. The group was later completed by Kirsi Mustalahti (FI), an actress and activist working with arts for disabled people through the Accessible Arts and Culture (ACCAC) organization.

Me with Lilian Jap, Joseph Wilk and Kirsi Mustalahti

Inspired by the post-apocalyptic feeling of the reactor and the theme of extraterrestrial colonization that circulated in the whole #MTFLabs group, we decided to make a piece about having to reinvent language through paralanguage and body movements. Joseph discovered a really nice speech database of English accents, featuring almost 2700 sound files of a single statement spoken by different speakers from all over the world. The database was a fitting metaphor for "humanity", which we felt suited our idea well. Our initial plan was to use a WaveNet encoder, a machine learning technique working at the audio sample level, to train a model that we could control with Kirsi's and Lilian's movements. However, training turned out to take far more time than we had at our disposal.

With the sample database downloaded, we at least had a rich and consistent source of sounds. Joseph organized the material so that he could play it with his live coding setup, and this worked so well that we kept it in the performance for the build-up in the final section.

Without a trained model and an interface to the parameters of the database, it was difficult for me to create an instrument that could play the whole database. I therefore chose to use only a subset of the samples (about 100 of them), which I stretched and layered in different ways using Csound. We put an NGIMU sensor and a Myo armband on each of the dancers and mapped them so that each dancer controlled their own set of voices. We then structured the piece in two sections. The first (discovery and first contact) dwelt on two single syllables, "plea" (from please) and "call", each played by one dancer. The final part (beginning communication developing into chaos) built up textures and layers of an ever-increasing number of words and syllables, played by the dancers and by Joseph's live coding. The piece ended after about 4:30 with a sudden freeze of movement and silence, whereupon a comprehensible version of the speech material was presented to the audience: "Please call Stella. Ask her to bring these things with her from the store", a highly mundane statement rounding off the somewhat existential themes.

Here is a video of the first section of one of our performances (we gave three in total), shot by Mark Stokes:

After the performance we all agreed that our work together had a lot of potential, both for artistic development and for use by people with other abilities, so we have submitted several applications for further work on the project, called Accents in Motion.

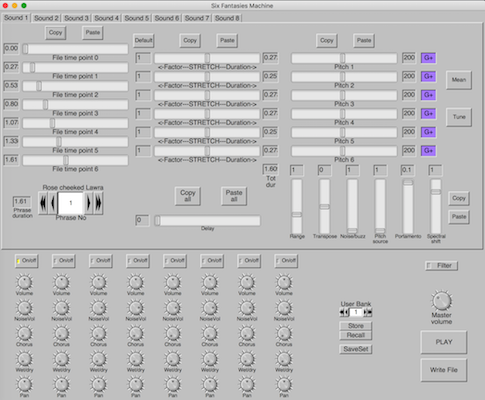

Running version of Six Fantasies Machine

The Six Fantasies Machine (SFM) instrument is a tool developed in Csound for producing a range of sounds similar to those found in Paul Lansky's classic computer music composition Six Fantasies on a Poem by Thomas Campion. I made it as part of my PhD project, Experiencing Voices in Electroacoustic Music, which was finished in 2010. Because of changes in Csound, for many years I could not run the software on recent versions, which was a bit sad. However, I have now (October 2018) gone through the code again and got it up and running on Csound 6.12 on OSX.

For download, info and sound examples, check the SFM project page.

Startup of VIBRA - network for interactive dance

After several years of working with interactive dance, mostly outside Norway, I thought it would be interesting to gather people in my local region to do some work here. I was lucky enough to get a grant from the Norwegian Culture Council, which made it possible to arrange a series of three workshops in Trondheim with dancers/choreographers, video artists and a music tech colleague during the winter/spring of 2018.

The network was named VIBRA, a Norwegian acronym that translates into Visuals - Interaction - Movement - Space - Audio. The project now has its own website, www.vibra.no, where we have posted information about the project and a blog about technical as well as artistic issues.

VIBRA is a network of artists and technologists who explore the possibilities for artistic expression related to video, interaction, movement, space and audio, often referred to in short as interactive dance. By interactive dance, we mean a cross-disciplinary artistic expression in which dancers, using sensor and computer technology, control one or more aspects of sound, music, video, light or other scenic instruments. This has so far been largely unexplored in Norway. Much of the non-classical dance presented on Norwegian stages today is accompanied by pre-recorded music and/or video. With such a fixed accompaniment, the relationships between motion, music and visual elements essentially work one way. By using new technology, we hope to introduce an interactivity that can provide a more dynamic expression and bring the relationships between the art forms more closely into play. The start-up of the network will involve a group of people from Trondheim/Trøndelag with relevant backgrounds, who will constitute the project group.

Here is a short excerpt from the showing at the last workshop, using NGIMU and Myo sensors with four dancers and projecting sound over an 8-channel setup:

The video excerpt shows dancers Tone Pernille Østern and Elen Øyen.

Presentation at IRCAM Forum Workshops

The IRCAM Forum Workshop is an annual event in which IRCAM Forum members get to share and discuss ideas. As they write on their website, "The Ircam Forum workshops is a unique opportunity to meet the research and development teams as well as computer music designers and artists in residency at IRCAM. During these workshops, we will share news on technologies and expertise providing hands-on classes and demos on sound and music."

As an IRCAM Forum member, I made a submission with Robert Wechsler entitled MotionComposer - A Therapy Device that Turns Movement into Music. Luckily it was accepted, and the presentation was held on March 8, 2018. It was a great experience to show our work at this renowned institution in front of a highly competent audience. And of course, we also greatly enjoyed seeing the presentations by the IRCAM teams as well as by the other IRCAM Forum members.

Abstract

The MotionComposer is a therapy device that turns movement into music. The newest version uses passive stereo-vision motion tracking technology and offers a number of musical environments, each with a different mapping. (Previous versions used hybrid CMOS/ToF technology.) In serving persons of all abilities, we face the challenge of providing the kinesic and musical conditions that afford sonic embodiment, in other words, conditions that give users the impression of hearing their movements and shapes. A successful therapeutic device must a) have a low entry fee, offering an immediate and strong causal relationship, and b) offer an evocative dance/music experience to ensure motivation and interest over time. To satisfy both these priorities, the musical environment "Particles" uses a mapping in which small discrete movements trigger short, discrete sounds, and larger flowing movements make rich conglomerations of those same sounds, which are then further modified by the shape of the user's body.
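The Particles mapping described in the abstract can be illustrated with a small Python sketch. It assumes, hypothetically, that the tracker delivers a normalized movement amount and a normalized body width for each frame. The threshold, grain count and grain duration are made-up values, and the actual Csound implementation of Particles is not shown here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Grain:
    duration_ms: float   # a short, discrete sound particle
    pitch_spread: float  # 0..1, how widely the grains are spread in pitch


def particles_for_frame(movement: float, body_width: float,
                        threshold: float = 0.05, max_grains: int = 12) -> List[Grain]:
    """Map one tracker frame to the grains to trigger (hypothetical sketch).

    movement:   normalized amount of movement in this frame (0..1)
    body_width: normalized extension of the body (0..1), used to shape the result
    """
    if movement < threshold:
        return []  # stillness stays silent
    # Rescale the part above the threshold to 0..1, clamping values above 1.
    level = min(1.0, (movement - threshold) / (1.0 - threshold))
    # A small movement yields one grain; larger movements stack more of the same grain.
    count = 1 + int(level * (max_grains - 1))
    # The body's shape modifies the conglomeration: a wider stance spreads the pitches.
    spread = min(1.0, max(0.0, body_width))
    return [Grain(duration_ms=60.0, pitch_spread=spread) for _ in range(count)]
```

Using the same grain for both cases is what keeps the causal link clear: a tiny movement is heard as one particle, and a sweeping one as a cloud of those same particles.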